How to Use A/B Testing to Optimize Conversion Rate for Your Store

Although using a website builder makes creating an online store simple, it’s still difficult to hit on a winning combination on your first go. There’s always room for improvement and it’s important to continue to iterate on your website’s design to try and boost conversions as much as possible.

But how do you know if any changes you make are going to be better than your original design? That’s where A/B testing comes in. It can help you trial new ideas risk-free, and give you an insight into what your customers do and don’t like. By implementing A/B testing as part of your ecommerce conversion optimization strategy, you can make data-driven decisions to enhance your website’s performance.

In this article, we’re going to take a dive into this practice. We’ll give you a better idea of what it is, why it’s great, and how to do it. Ready?

Introduction to A/B Testing

A/B testing, also known as split testing or bucket testing, is a way of comparing two different versions of a web page or a social media strategy – or anything, really – to see which one your audience likes best.

A/B tests allow you to figure out which elements of your website lead to an increase in conversion rate, what your audience skips over, what content they find the most important, and even what their visual and audio preferences are.

There are a ton of benefits to using A/B testing:

- You can lift your conversion rate, which means more revenue from the same traffic

- You can make decisions based on actual user behavior rather than guessing

- You can break your audience into segments, which helps you tailor your site to each group

But what’s involved in an A/B test? Well, it always uses a control (which is the original version you’re testing against) and what’s called a variant (which is the new version).

Your users are randomly assigned to either version, then their actions are tracked to determine which version yields better results.
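To make the random split more concrete, here's a minimal Python sketch of one common approach: hashing a user ID so that each visitor lands in the same group on every visit. The function name `assign_variant`, the experiment label, and the user IDs are all made up for illustration. Your A/B testing tool will handle this step for you in its own way.

```python
import hashlib


def assign_variant(user_id: str, experiment: str,
                   variants=("control", "variant")) -> str:
    """Map a user to a group deterministically (hypothetical helper).

    Hashing the experiment name together with the user ID gives a split
    that looks random across users but is stable for any single user.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


if __name__ == "__main__":
    # Simulated visitors: the two groups should come out roughly equal.
    counts = {"control": 0, "variant": 0}
    for i in range(10_000):
        counts[assign_variant(f"user-{i}", "cta-color")] += 1
    print(counts)
```

Because the group depends only on the experiment name and the user ID, a returning visitor never flips between versions halfway through a test.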

There are misconceptions about A/B testing. Some people think it’s a waste of time, or too difficult to do. Others see it as something that only big companies with massive marketing budgets can carry out.

But that’s not true. A/B testing can be simple, affordable, and accessible for businesses of all sizes. And when done right, it’s always worth it.

Setting Up an A/B Testing Framework

Before you start your A/B test, you need to figure out what you want to achieve from the test. Start by listing your goals and objectives. What type of information are you hoping to get? Is there a particular outcome you’re focusing on? For example, do you want better sales or are you trying to minimize cart abandonment? Be specific and set measurable targets.

Then you can choose the KPIs (key performance indicators) that will help you measure the success of your A/B tests. These could include conversion rate, average order value, or bounce rate, depending on your goals.
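If you'd like to see how these KPIs are calculated, here's a small Python sketch. The `Session` record and its fields are assumptions made for this example, and bounce rate is taken to mean the share of single-page sessions. Your analytics tool may define it differently.

```python
from dataclasses import dataclass


@dataclass
class Session:
    """One visit to the store (hypothetical record for illustration)."""
    pages_viewed: int
    order_value: float  # 0.0 when the visit ended without a purchase


def conversion_rate(sessions):
    """Share of sessions that ended in a purchase."""
    orders = sum(1 for s in sessions if s.order_value > 0)
    return orders / len(sessions)


def average_order_value(sessions):
    """Mean value of the sessions that did convert."""
    values = [s.order_value for s in sessions if s.order_value > 0]
    return sum(values) / len(values) if values else 0.0


def bounce_rate(sessions):
    """Share of sessions that viewed only one page (assumed definition)."""
    bounces = sum(1 for s in sessions if s.pages_viewed == 1)
    return bounces / len(sessions)


if __name__ == "__main__":
    sample = [Session(1, 0.0), Session(3, 40.0),
              Session(2, 0.0), Session(5, 60.0)]
    print(conversion_rate(sample), average_order_value(sample),
          bounce_rate(sample))
```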

There are lots of tools and software out there to help you carry out your A/B testing. Some are free, while you'll need to pay for others such as Optimizely and VWO. (Google Optimize, long the best-known free option, was retired by Google in September 2023.) Choose the tools and platform that fit your budget and needs.

Once you’ve decided on a platform, you can establish a testing schedule and timeline to make sure that you’re running everything consistently.

Planning, Designing and Conducting A/B Tests

Start by measuring your website's current performance to establish a baseline. That way you'll know how much change your A/B tests actually bring, which will help you shape what to do in the future.
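One simple way to express "how much change" is relative lift: the difference between the variant's rate and the baseline, divided by the baseline. Here's a short Python sketch. The function name and the example rates are illustrative, not real data.

```python
def relative_lift(baseline_rate: float, variant_rate: float) -> float:
    """Relative change of the variant compared with the baseline.

    A result of 0.25 means the variant performed 25% better than the baseline.
    """
    if baseline_rate <= 0:
        raise ValueError("baseline_rate must be greater than zero")
    return (variant_rate - baseline_rate) / baseline_rate


if __name__ == "__main__":
    # Made-up example: baseline converts at 2.0%, variant at 2.5%.
    print(f"{relative_lift(0.020, 0.025):.0%}")
```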

Next, you’ll need to identify website elements that can influence your conversion rate. These can be anything from headlines and calls-to-action to color schemes. It can be really useful to run a CRO (conversion rate optimization) audit at this point, to help pinpoint specific areas that could use some improvement.

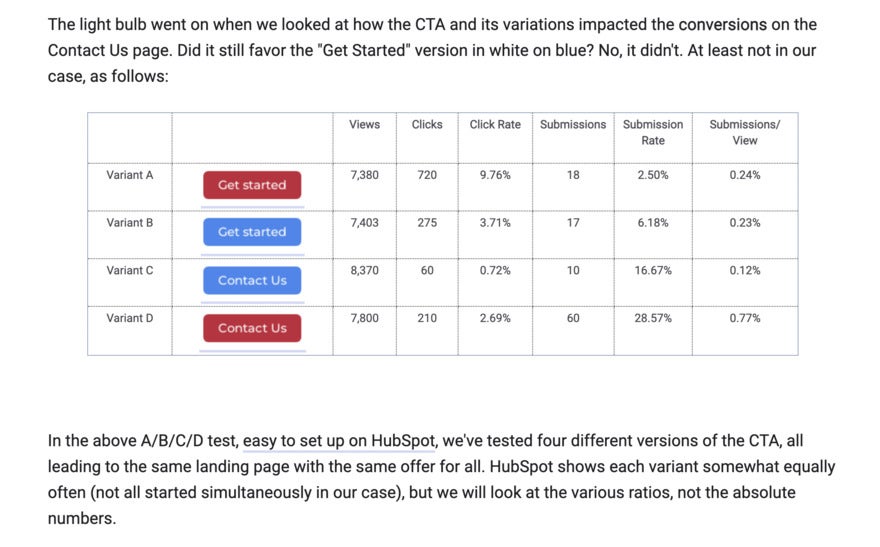

For each element you plan to test, formulate a hypothesis. A hypothesis is an educated guess of the outcome. For example, you might hypothesize that, “Changing the CTA button from blue to green will increase click-through rate because green is linked to financial success.”

Next, you need to decide your sample size. This might be a bit tricky if you have a smaller audience. As a rule of thumb, we’d recommend a sample of at least 1,000 users if you want your results to be statistically reliable. The exact number you need depends on your current conversion rate and on how small a change you want to be able to detect.

If you have fewer than that, you can still do the A/B testing, but the results might not be as statistically meaningful.
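For a rough idea of how sample size relates to the change you're hoping to detect, here's a Python sketch using the standard normal-approximation formula for comparing two conversion rates. The 5% significance level and 80% power are conventional defaults that we've assumed here, and the rates in the example are invented. Treat the output as an estimate, not a guarantee.

```python
import math
from statistics import NormalDist


def sample_size_per_group(baseline: float, expected: float,
                          alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate visitors needed in EACH group (control and variant).

    baseline: current conversion rate, e.g. 0.03 for 3%
    expected: the rate you hope the variant reaches, e.g. 0.04
    """
    if baseline == expected:
        raise ValueError("baseline and expected rates must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (baseline + expected) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(baseline * (1 - baseline)
                             + expected * (1 - expected))
    ) ** 2
    return math.ceil(numerator / (expected - baseline) ** 2)


if __name__ == "__main__":
    # Hypothetical store: 3% baseline, hoping the variant reaches 4%.
    print(sample_size_per_group(0.03, 0.04))
```

The smaller the improvement you want to detect, the more visitors you need, which is why small stores often get clearer results by testing bolder changes.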

After you’ve decided on your sample size, the next step is to construct the variants that you’ll be testing against the control. Be sure to only change one element at a time – if you test more than one, you’ll never know which was the element that influenced your users’ behavior.

Your A/B testing tool will then randomly assign users to the control or variant group. And if your audience is big enough, you can choose to segment your users using demographics (like age, income or gender), location, or device to gain an even deeper insight into their behavior.

Next, set up your A/B tests on your chosen platform. Make sure you monitor the tests carefully so you can be certain they’re running smoothly and capturing data.

You can track user behavior on both versions, through the lens of your KPIs. This will allow you to see how users are interacting with each version and if the new element is having the impact you want.

Before drawing conclusions from your test results, make sure they’re valid and statistically significant. There are lots of factors that can influence validity.

For example, you might find that a new color scheme on your website does really well. However, outside factors might have influenced that result, like the novelty effect, the time of day, the weather, or the season. Checking really makes a difference!

Test validity is important because it helps you avoid decisions based on misleading or inconclusive data.

Analyzing A/B Test Results

Once you’ve gathered your data, it’s time to analyze it so that you can work out what it means for your website going forward. Below are some best practices for analyzing the test results:

- Gathering and organizing data – Once your test has run, gather and organize the data using spreadsheets or data visualization tools to help you make sense of the numbers.

- Statistical analysis and significance testing – Perform statistical analysis on your data, comparing how each group performed. Are there big differences? Is there a clear winner?

- Interpreting results and identifying winning variants – If a variant does better than the control (and you’ve ruled out external factors), it’s time to put that variant onto the website. If not, use that insight for future A/B tests.

- Identifying insights and actionable recommendations – You’ll be qualifying success against a specific KPI, but do your results indicate positives for any other metrics? For example, did users react faster or stay on the site longer? Your results can offer a ton of insights that can help generate ideas for future A/B tests.
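To show what the significance testing step can look like, here's a Python sketch of a two-proportion z-test, one common way to compare conversion rates between two groups. It isn't the only valid method, and most A/B testing tools run an equivalent check for you. The function name and the visitor counts are invented for illustration.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Compare two conversion rates; returns (z score, two-sided p-value).

    conv_a / n_a: conversions and visitors in the control group
    conv_b / n_b: conversions and visitors in the variant group
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("cannot test when no one or everyone converted")
    z = (p_b - p_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


if __name__ == "__main__":
    # Made-up numbers: 100 of 1,000 control visitors converted,
    # against 150 of 1,000 variant visitors.
    z, p = two_proportion_z_test(100, 1000, 150, 1000)
    print(f"z = {z:.2f}, p = {p:.4f}")
    print("significant at 5%" if p < 0.05 else "not significant at 5%")
```

A p-value below your chosen threshold (0.05 is a common convention) suggests the difference is unlikely to be down to chance alone. It doesn't rule out the outside factors mentioned earlier, so you still need to check for those separately.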

Implementing Successful A/B Test Variations

You’ve made it through the A/B testing and now implementation is where it’s at!

Remember that A/B testing is a continuous journey – it’s not a one-off. So consider the results to have an expiration date. Continue to run iterative (small-scale) tests so that you give your content and website the best chance to please your users.

When it’s time to make the changes, be strategic about where you focus. High-impact and low-effort choices should come first.

Once your A/B tests are finished and you’ve identified the winners and implemented them on your website, make sure you keep an eye on your site’s performance. Are these improvements still having the desired effect? If they are, keep going! If not, you may have to make some adjustments or run another round of A/B tests.

When you do get the amazing feeling of finding a winning variation, maximize! Scale those successful changes and roll them out across other areas of your website, content, and marketing campaigns.

Continuous A/B Testing and Iteration

If you want your online store’s website (and social media accounts) to do well, continuous testing and optimization will help. When you encourage yourself and your team to look for ways to improve and refine your website, you give yourself an advantage over your competitors.

Try different strategies for ongoing A/B testing including:

- testing different user segments

- personalizing content

- leveraging customer feedback to help shape new tests.

Advanced A/B Testing Techniques

If you’re very adventurous and want to graduate to advanced testing, here are a few things to consider:

Multivariate testing is an advanced technique that tests multiple variables at the same time. This can help you find the combination of elements that your users respond to best. Beware though: your traffic is split across every combination, so you need a much larger audience, and if the test isn’t set up carefully, it can muddy your results rather than clarify them.
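To see why multivariate tests need so much more traffic, here's a short Python sketch that lists every combination a test would have to cover. The elements and their options are invented for this example.

```python
from itertools import product


def all_combinations(elements: dict) -> list:
    """Return every combination of options as a list of dicts."""
    names = list(elements)
    return [dict(zip(names, combo)) for combo in product(*elements.values())]


if __name__ == "__main__":
    # Hypothetical page elements and the options to test for each.
    elements = {
        "headline": ["Free shipping", "20% off today"],
        "cta_color": ["blue", "green"],
        "hero_image": ["lifestyle", "product", "none"],
    }
    combos = all_combinations(elements)
    print(len(combos))  # 2 x 2 x 3 = 12 versions to split traffic across
    print(combos[0])
```

Each extra element multiplies the number of versions, so every version gets a smaller share of your visitors.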

Personalization involves tailoring your website content to specific user segments. It can be combined with targeted A/B testing which can help you optimize your personalized content for even better results.

For example, you might be interested in how your customers in Canada differ in preferences from your customers in the US. Do they come on at different times of the day? Do they prefer different product descriptions? Do they want different price points? Targeted testing helps with that.

Cross-device and cross-channel A/B testing will help you figure out things like how differently your users act on laptops versus phones. Audiences use different devices and channels, so testing will help you give a seamless, consistent experience across all of them.

Avoiding Common A/B Testing Pitfalls

As with everything, there are some pitfalls to avoid when carrying out A/B tests:

- Look out for potential biases in your testing, such as selection bias (testing specific segments over others) or confirmation bias (testing to prove something you already think is the truth). Take steps to make sure your tests are as objective as possible – otherwise, your data will be skewed and could hinder, rather than help, your goal.

- Make sure your tests are valid and statistically significant before you draw any conclusions that you act on. This will help you avoid making decisions based on misleading results or false positives.

- Understand there are some challenges and limitations of A/B testing. For example, there is the potential for inconclusive results or external factors that could affect your outcomes. You might need to adapt and alter how you approach your testing.

Summary

When done right, A/B testing can be a very powerful tool to optimize your online store’s conversion rate and empower you to make data-driven decisions that help you get ahead of the competition.

By following this guide, you’ll be in a better place to start experimenting and refining your website for maximum success. Let us know how you get on in the comments below!
